This blog provides a comprehensive overview of customer security responsibilities in AWS cloud environments. It outlines the key security components customers must configure, emphasizing best practices for account management, identity and access management, detective controls, network security, infrastructure security, data security, and incident response. Amazon Web Services provides a secure global infrastructure and services in the cloud. Security and compliance are shared responsibilities between AWS and the customer: AWS is responsible for “Security of the Cloud” and the customer is responsible for “Security in the Cloud.”

The following are the AWS security components that the customer must configure as part of their security responsibility.

AWS Account Structure

Identity and Access Management

Detective Controls

Infrastructure Security

Data Protection

Incident Response

AWS Account Structure Strategy

While there’s no “one size fits all” solution, the best practice is to adopt a multi-account strategy to improve security posture, minimize blast radius, and simplify cost management and reporting. Implement a hub-and-spoke account design with peering between accounts. An AWS multi-account strategy provides agility, security, cost optimization, and cost allocation.

AWS Organizations is a service that enables customers to consolidate and centrally manage multiple AWS accounts. With AWS Organizations, we can create accounts and invite existing accounts to join the organization. We can organize those accounts into groups and attach policy-based controls such as service control policies (SCPs). If we already have a consolidated billing family of accounts, those accounts automatically become part of our organization.

There is one AWS master billing account (now called the management account) per organization. The typical AWS account structure for a business unit has the following AWS sub-accounts.

● Non-production (Dev, QA, Staging, UAT)

● Production

● Shared-Services

● Audit/consolidated logging

● Security

AWS Master Billing Account: To help secure AWS resources, the following are the recommendations for the AWS Organizations master account.

● Use AWS organizations to centrally manage and enforce policies for multiple AWS accounts.

● Enable physical (hardware) multi-factor authentication (MFA) on the root user

● Don’t provision any resources in the master account

● Create new AWS accounts or invite existing AWS accounts to join the organization

● Create organizational units (OUs), for example one per business unit, under the organization root and assign the AWS sub-accounts to them

● Create service control policies (SCPs) that restrict, at sub-account granularity, which services and actions the users, groups, and roles in those accounts can perform.

● Create a small number of privileged IAM users and attach them to a group with least-privilege policies

● Design an SCP that prevents all users from disabling AWS CloudTrail and AWS Config, and attach it to all sub-accounts to preserve log integrity, prevent accidental deletion, and increase overall security.

● Enable CloudTrail and AWS Config logs in the master account and send these logs to the Audit/consolidated logging sub-account.

● Grant least-privilege access and then expand the SCPs incrementally as required.

● Configure a strong password for the master account root user

● Don’t create access keys (access key ID and secret access key) in the master account.

● Delete any existing root user access keys.

● Create a group distribution email list for alerts and event notifications.

● Enable consolidated billing

● Use cost allocation tags for billing.
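The SCP recommendation above (preventing users from disabling CloudTrail and AWS Config) can be sketched as a policy document. This is a minimal sketch that only builds the JSON; the action list is an assumption to adapt to your environment, and the resulting document would be attached to OUs or sub-accounts, for example through the console or boto3's `organizations.create_policy` with `Type="SERVICE_CONTROL_POLICY"`.

```python
import json

# Assumed list of actions that would stop or remove audit logging.
PROTECTED_ACTIONS = [
    "cloudtrail:StopLogging",
    "cloudtrail:DeleteTrail",
    "cloudtrail:UpdateTrail",
    "config:StopConfigurationRecorder",
    "config:DeleteConfigurationRecorder",
    "config:DeleteDeliveryChannel",
]


def build_protect_logging_scp(actions=PROTECTED_ACTIONS):
    """Return an SCP document (as a dict) that denies the given actions on all resources."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyDisablingAuditLogging",
                "Effect": "Deny",
                "Action": list(actions),
                "Resource": "*",
            }
        ],
    }


if __name__ == "__main__":
    # Print the JSON that would be supplied as the SCP content.
    print(json.dumps(build_protect_logging_scp(), indent=2))
```

Denying `cloudtrail:UpdateTrail` also blocks legitimate trail changes, so teams often add an exception for a dedicated administration role. Keep in mind that SCPs do not restrict the management (master) account itself.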

For billing purposes, AWS treats all the sub-accounts as if they were one account. Some services, such as Amazon EC2 and Amazon S3, have volume pricing tiers across certain usage dimensions that give us lower prices when we use the service more. With consolidated billing, AWS combines the usage from all accounts to determine which volume pricing tiers to apply, giving us a lower overall price whenever possible. AWS then allocates each linked account a portion of the overall volume discount based on the account’s usage.
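To make the volume-discount arithmetic concrete, here is a small worked example with made-up tier sizes and prices (not actual AWS rates). It compares two accounts billed separately against the same usage billed together, and splits the saving in proportion to each account's usage, which is a simplifying assumption about how the discount is allocated.

```python
from decimal import Decimal

# Hypothetical tiers for illustration only: (units in tier, price per unit).
# None means "all remaining usage".
TIERS = [
    (50, Decimal("0.023")),
    (450, Decimal("0.022")),
    (None, Decimal("0.021")),
]


def tiered_cost(usage, tiers=TIERS):
    """Bill usage tier by tier: later (cheaper) tiers apply only after earlier ones fill."""
    remaining = Decimal(usage)
    total = Decimal("0")
    for size, price in tiers:
        if remaining <= 0:
            break
        billed = remaining if size is None else min(remaining, Decimal(size))
        total += billed * price
        remaining -= billed
    return total


def consolidated_savings(account_usage, tiers=TIERS):
    """Return the combined bill and each account's share of the discount (pro rata by usage)."""
    standalone_total = sum(tiered_cost(u, tiers) for u in account_usage.values())
    combined_usage = sum(account_usage.values())
    combined = tiered_cost(combined_usage, tiers)
    discount = standalone_total - combined
    shares = {
        account: discount * Decimal(u) / Decimal(combined_usage)
        for account, u in account_usage.items()
    }
    return combined, shares


if __name__ == "__main__":
    combined, shares = consolidated_savings({"dev": 300, "prod": 300})
    print(combined, shares)
```

With these numbers, 300 units billed alone cost 6.65 per account (13.30 in total), while 600 units billed together cost 13.15 because the last 100 units reach the cheapest tier; the 0.15 saving is split 0.075 per account. `Decimal` is used so that currency amounts are not affected by floating-point rounding.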

AWS Sub Accounts: The following are the recommendations for the AWS Sub Accounts.

● Enable CloudTrail logs in all the sub-accounts

● Enable AWS Config logs in all the sub-accounts

● Create a group distribution email list for alerts and event notifications.

● Delete root user access keys.

● Rotate access keys regularly.

● Grant least-privilege access.

● Provision AWS resources in sub-accounts.

● Enforce a tagging policy
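A tagging policy is usually enforced with AWS Organizations tag policies or AWS Config rules; the sketch below only illustrates the check itself, for example as a pre-deployment step in a pipeline. The required tag keys and allowed values are hypothetical.

```python
# Hypothetical tagging standard: key -> allowed values (None accepts any non-empty value).
REQUIRED_TAGS = {
    "CostCenter": None,
    "Environment": {"dev", "qa", "staging", "uat", "prod"},
    "Owner": None,
}


def tag_violations(tags, required=REQUIRED_TAGS):
    """Return human-readable violations for one resource's tags (a dict of key -> value)."""
    problems = []
    for key, allowed in required.items():
        value = tags.get(key)
        if not value:
            problems.append(f"missing tag {key}")
        elif allowed is not None and value not in allowed:
            problems.append(f"tag {key}={value!r} not in {sorted(allowed)}")
    return problems


if __name__ == "__main__":
    print(tag_violations({"Environment": "production"}))
```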

Identity and Access Management

AWS Identity and Access Management (IAM) allows customers to manage users, groups, roles, identities, and permissions. However, it’s entirely up to AWS customers to configure IAM properly to meet their security, audit, and compliance requirements. The following are AWS IAM security best practices.

● Delete the root account access keys.

● Configure a strong password policy for all the users.

● Create IAM users and groups with least-privilege access.

● Use IAM policy conditions for fine-grained access control

● Rotate access keys regularly, and avoid long-term access keys where possible. Instead, use IAM roles, for which AWS automatically issues and rotates temporary credentials.

● Use SAML-federated single sign-on (SSO) with on-premises Active Directory for authentication, and use IAM roles to authorize access to AWS resources.

● Create day-one roles, cross-account roles, and service roles based on organizational requirements.

● Enable physical (hardware) MFA for the AWS root user.

● Enable virtual MFA for privileged users

● Leverage AWS managed policies.

● When required, create customer managed policies and inline policies to attach to a specific user or group.

● Use AWS Security Token Service (STS) for temporary credentials

● Validate the status of credentials using IAM credential reports

● Avoid storing API access keys in source control repositories.
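Several of these practices (MFA, root keys, key rotation) can be checked from the IAM credential report. The sketch below parses a trimmed, made-up sample in the report's CSV layout; with boto3 the real report would come from `iam.generate_credential_report()` followed, once generation completes, by `iam.get_credential_report()["Content"]` (bytes to decode). The 90-day rotation window is an assumption.

```python
import csv
import io
from datetime import datetime, timedelta, timezone

# Made-up, trimmed sample in the IAM credential report CSV layout.
# Real reports contain more columns; "N/A" marks values that do not apply.
SAMPLE_REPORT = """user,arn,mfa_active,access_key_1_active,access_key_1_last_rotated,access_key_2_active,access_key_2_last_rotated
<root_account>,arn:aws:iam::111122223333:root,true,false,N/A,false,N/A
alice,arn:aws:iam::111122223333:user/alice,true,true,2024-01-01T00:00:00+00:00,false,N/A
bob,arn:aws:iam::111122223333:user/bob,false,true,2024-05-20T00:00:00+00:00,false,N/A
"""

MAX_KEY_AGE = timedelta(days=90)  # assumed rotation window; set per your policy


def audit_credential_report(report_csv, now):
    """Return (user, finding) pairs for missing MFA, root keys, and stale access keys."""
    findings = []
    for row in csv.DictReader(io.StringIO(report_csv)):
        user = row["user"]
        if row["mfa_active"] != "true":
            findings.append((user, "MFA not enabled"))
        for n in ("1", "2"):
            if row[f"access_key_{n}_active"] != "true":
                continue  # inactive or absent key
            if user == "<root_account>":
                findings.append((user, f"root access key {n} is active"))
            rotated = datetime.fromisoformat(row[f"access_key_{n}_last_rotated"])
            if now - rotated > MAX_KEY_AGE:
                findings.append((user, f"access key {n} older than {MAX_KEY_AGE.days} days"))
    return findings


if __name__ == "__main__":
    for user, finding in audit_credential_report(SAMPLE_REPORT, datetime.now(timezone.utc)):
        print(f"{user}: {finding}")
```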

Detective Controls (Logging and Monitoring)

Detective controls provide security visibility, controllability, auditability, and agility. They help us identify a potential security threat or incident. They are an essential part of governance frameworks and can be used to support a quality process, a legal or compliance obligation, and threat identification and response efforts. We can consider two key areas of detective controls:

Consolidated centralized logging

Integrate auditing controls with alerts and notifications

Consolidated Centralized logging

Collecting logs into a centralized, dedicated logging/audit account is an established best practice. It helps security teams detect malicious activity both in real time and during incident response. All AWS infrastructure logs from multiple accounts are sent to the consolidated audit account, where they are stored in S3 buckets, with centralized KMS encryption enabled for some of the AWS log services.

At a high level, there is one centralized S3 bucket for each AWS log service in the logging/audit account. Every account writes to the same bucket for a given service (the top-level folder), with the account type as a sub-folder that includes the account ID.
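As a sketch of this layout, assuming each account's trail uses its account type as the S3 key prefix, the CloudTrail bucket in the logging/audit account could allow CloudTrail to write under each account's `AWSLogs/<account-id>/` path. The statement shape follows the CloudTrail bucket policy pattern in AWS documentation; the bucket name, account IDs, and prefixes are placeholders, and current AWS guidance also recommends adding an `aws:SourceArn` condition naming the trail.

```python
import json


def cloudtrail_bucket_policy(bucket, accounts):
    """Build a bucket policy letting CloudTrail deliver logs for several accounts.

    `accounts` maps account ID -> account type; the account type is assumed to be
    the S3 key prefix configured on that account's trail.
    """
    write_resources = [
        f"arn:aws:s3:::{bucket}/{account_type}/AWSLogs/{account_id}/*"
        for account_id, account_type in sorted(accounts.items())
    ]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AWSCloudTrailAclCheck",
                "Effect": "Allow",
                "Principal": {"Service": "cloudtrail.amazonaws.com"},
                "Action": "s3:GetBucketAcl",
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                "Sid": "AWSCloudTrailWrite",
                "Effect": "Allow",
                "Principal": {"Service": "cloudtrail.amazonaws.com"},
                "Action": "s3:PutObject",
                "Resource": write_resources,
                "Condition": {"StringEquals": {"s3:x-amz-acl": "bucket-owner-full-control"}},
            },
        ],
    }


if __name__ == "__main__":
    policy = cloudtrail_bucket_policy(
        "example-org-cloudtrail-logs",
        {"111122223333": "prod", "444455556666": "nonprod"},
    )
    print(json.dumps(policy, indent=2))
```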

The following are the AWS infrastructure logs services that must be enabled and configured in all AWS accounts.

· CloudTrail logs

· AWS Config logs

· Elastic Load Balancing access logs

· VPC Flow logs

· S3 access logs

Integrate auditing controls with alerts and notifications

A best practice for building mature security operations is to deeply integrate the flow of security events and findings into security information and event management (SIEM) tools such as Splunk or the ELK stack. These tools not only aggregate, analyze, and visualize events and send alert notifications, but also generate information for reporting and auditing. Use third-party tools such as PagerDuty, Whisper, etc. to automate messaging, voice, and email communications for security event notifications and incident response.

Leveraging the following AWS native solutions will enhance security, audit compliance, and operational standards.

Automate responses to CloudWatch Events (now Amazon EventBridge) with AWS Lambda

Configure Amazon Inspector to detect security defects or misconfigurations in operating systems and applications.

Use AWS Config rules with AWS Lambda for change management, self-healing, and incident response

Use Amazon GuardDuty, a threat detection service that continuously monitors for malicious or unauthorized behavior

Use AWS Trusted Advisor for security monitoring
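The first item, automating responses to CloudWatch Events with Lambda, might look like the handler below, which an EventBridge rule matching GuardDuty findings would invoke. It is a sketch: the routing thresholds ("page", "ticket", "log") are assumptions layered on GuardDuty's numeric severity (where High starts at 7.0), and the notification step is left as a comment rather than a real SNS or PagerDuty call.

```python
import json

# Assumed routing thresholds on GuardDuty's numeric severity.
PAGE_THRESHOLD = 7.0    # High severity and above: page the on-call team
TICKET_THRESHOLD = 4.0  # Medium severity: open a ticket


def classify(severity):
    """Map a numeric severity to a hypothetical response action."""
    if severity >= PAGE_THRESHOLD:
        return "page"
    if severity >= TICKET_THRESHOLD:
        return "ticket"
    return "log"


def lambda_handler(event, context):
    """Route a GuardDuty finding delivered by an EventBridge (CloudWatch Events) rule."""
    if event.get("source") != "aws.guardduty":
        return {"action": "ignore"}
    detail = event["detail"]
    message = {
        "action": classify(float(detail["severity"])),
        "account": event.get("account"),
        "region": event.get("region"),
        "type": detail.get("type"),
        "severity": detail["severity"],
    }
    # A real handler would publish `message` to SNS, a paging tool, or the SIEM here.
    print(json.dumps(message))
    return message
```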

Infrastructure Security

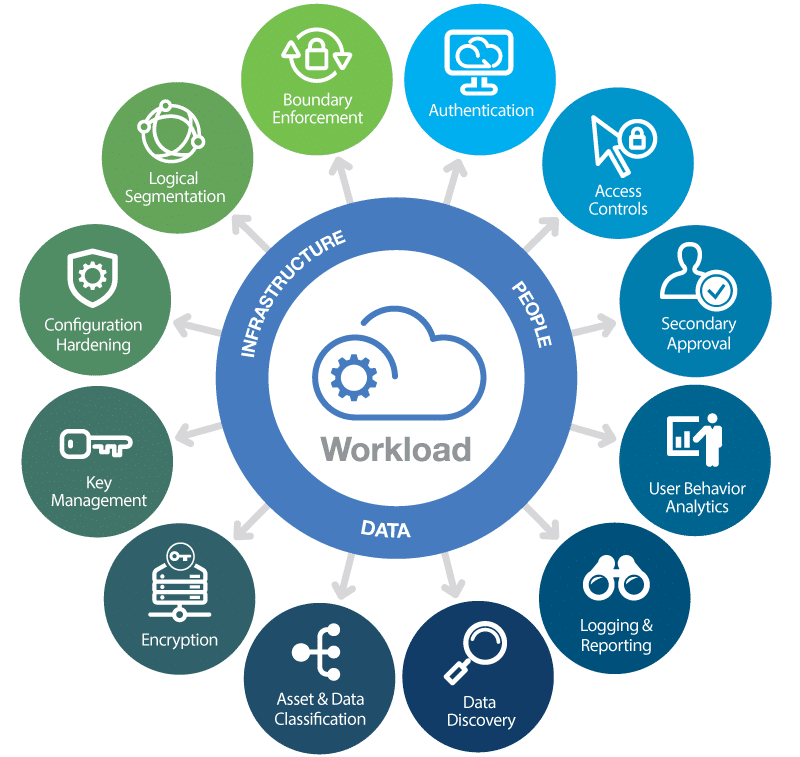

Incorporate security controls and tools in all layers of the infrastructure (proxy, web, app, and database). Infrastructure protection is a key part of an information security program. It ensures that systems and services within our workload are protected against unintended and unauthorized access and potential vulnerabilities.

Design a defense-in-depth strategy by using AWS cloud-native controls and third-party tools to protect the infrastructure.

The following are the AWS cloud-native controls to protect the infrastructure.

a) Network and host-level boundaries:

- Design the network topology and isolation by using Amazon Virtual Private Cloud (VPC)

- Delete the default VPCs in all AWS Regions

- Design the subnets based on application requirements, such as public, private, and VPN-only subnets.

- Configure inbound and outbound network ACL (NACL) rules to allow and deny IP traffic.

- Create security groups per layer, such as proxy-sg, elb-sg, web-sg, app-sg, and db-sg.

- Reference other security groups, rather than IP ranges, as the source or destination in security group rules

- Create a bastion host or jump box to control administrative access to the private instances.

- Only ELBs, bastion hosts, and edge firewalls would be hosted in the public subnet (proxy subnet). All other layers would be hosted in private subnets.

- Implement custom endpoint policies for VPC endpoints.

- Use AWS WAF (web application firewall) to protect web applications from common web exploits that could affect application availability and compromise security.

- Leverage AWS Shield to protect against distributed denial of service (DDoS) attacks.

- Use IPsec-encrypted AWS VPN connections through virtual private gateways to connect to on-premises systems.

- Use AWS Direct Connect for consistent, dedicated network connectivity from on-premises to AWS.
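The per-layer security groups that reference each other can be expressed as ingress rules in the `IpPermissions` shape used by the EC2 API. In this sketch the group IDs and ports are hypothetical; each tier accepts traffic only from the tier in front of it, and no CIDR ranges appear. The public-facing elb-sg rule (for example HTTPS from the internet) is deliberately not modeled.

```python
# Hypothetical security group IDs for each layer; real IDs come from your VPC.
SG = {
    "elb-sg": "sg-0aaa0000000000001",
    "web-sg": "sg-0aaa0000000000002",
    "app-sg": "sg-0aaa0000000000003",
    "db-sg": "sg-0aaa0000000000004",
}

# (target layer, source layer, TCP port); ports are illustrative assumptions.
LAYER_RULES = [
    ("web-sg", "elb-sg", 443),
    ("app-sg", "web-sg", 8080),
    ("db-sg", "app-sg", 5432),
]


def ingress_permissions(target):
    """Build IpPermissions entries that allow traffic into `target` only from other SGs."""
    perms = []
    for tgt, src, port in LAYER_RULES:
        if tgt == target:
            perms.append({
                "IpProtocol": "tcp",
                "FromPort": port,
                "ToPort": port,
                "UserIdGroupPairs": [{"GroupId": SG[src], "Description": f"from {src}"}],
            })
    return perms


if __name__ == "__main__":
    for layer in SG:
        print(layer, ingress_permissions(layer))
```

With boto3, each list could be applied via `ec2.authorize_security_group_ingress(GroupId=SG["db-sg"], IpPermissions=ingress_permissions("db-sg"))`. Referencing groups instead of IP ranges means rules keep working as instances scale in and out.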

b) Third-party tools for network protection:

For network- and host-level protection, leverage third-party tools such as Palo Alto Networks next-generation firewalls and Trend Micro for intrusion detection and prevention (IDS/IPS), data loss prevention, threat and vulnerability protection, anti-malware protection, and endpoint protection.

c) System security configuration and maintenance:

- Use AWS Systems Manager to automate the patch management process.

- Use Amazon Inspector to detect security findings for Common Vulnerabilities and Exposures (CVEs), Center for Internet Security (CIS) Benchmarks, Security Best Practices, and Runtime Behavior Analysis.

- Use infrastructure as code (AWS CloudFormation or Terraform) to automate the infrastructure and its security configuration.

- Use Amazon Data Lifecycle Manager (Amazon DLM) to automate the creation, retention, and deletion of snapshots taken to back up your Amazon EBS volumes.
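As an illustration of the DLM item, the dictionary below follows the shape of the `PolicyDetails` argument to boto3's `dlm.create_lifecycle_policy()`; the target tag and schedule are hypothetical. The helper function is not part of DLM: it simply models what a count-based `RetainRule` means (keep the newest N snapshots, delete the rest), which DLM performs for you.

```python
from datetime import datetime

# PolicyDetails shape for dlm.create_lifecycle_policy(); tag and schedule are hypothetical.
POLICY_DETAILS = {
    "PolicyType": "EBS_SNAPSHOT_MANAGEMENT",
    "ResourceTypes": ["VOLUME"],
    "TargetTags": [{"Key": "Backup", "Value": "daily"}],
    "Schedules": [{
        "Name": "DailySnapshots",
        "CreateRule": {"Interval": 24, "IntervalUnit": "HOURS", "Times": ["03:00"]},
        "RetainRule": {"Count": 7},
        "CopyTags": True,
    }],
}


def snapshots_to_delete(snapshots, retain_count):
    """Model a count-based retain rule: return IDs older than the newest `retain_count`.

    `snapshots` is a list of (snapshot_id, start_time) pairs.
    """
    if retain_count < 1:
        raise ValueError("retain_count must be at least 1")
    newest_first = sorted(snapshots, key=lambda s: s[1], reverse=True)
    return [snap_id for snap_id, _ in newest_first[retain_count:]]


if __name__ == "__main__":
    keep = POLICY_DETAILS["Schedules"][0]["RetainRule"]["Count"]
    snaps = [(f"snap-{day:02d}", datetime(2024, 1, day)) for day in range(1, 11)]
    print(snapshots_to_delete(snaps, keep))
```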

d) Service-level endpoint protection

- Define the IAM least-privilege methodology for service-level protection

- Use AWS KMS key policies to separate key administrator access from key user access.

- Use a combination of S3 bucket policies and IAM user policies to control access to S3 data.
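Separating KMS admin and user access is typically done in the key policy. The sketch below builds such a policy using action lists modeled on the AWS KMS default key policy, plus a rough allow-only checker; the role names and account ID are hypothetical, and the checker ignores Deny statements, conditions, and IAM policies.

```python
import fnmatch
import json

# Action lists modeled on the AWS KMS default key policy.
ADMIN_ACTIONS = [
    "kms:Create*", "kms:Describe*", "kms:Enable*", "kms:List*", "kms:Put*",
    "kms:Update*", "kms:Revoke*", "kms:Disable*", "kms:Get*", "kms:Delete*",
    "kms:TagResource", "kms:UntagResource",
    "kms:ScheduleKeyDeletion", "kms:CancelKeyDeletion",
]
USER_ACTIONS = [
    "kms:Encrypt", "kms:Decrypt", "kms:ReEncrypt*",
    "kms:GenerateDataKey*", "kms:DescribeKey",
]


def key_policy(account_id, admin_role, user_role):
    """Build a key policy giving admins management rights and users cryptographic rights."""
    arn = f"arn:aws:iam::{account_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            # Lets IAM policies in the account also grant access to the key.
            {"Sid": "EnableIAMPolicies", "Effect": "Allow",
             "Principal": {"AWS": f"{arn}:root"}, "Action": "kms:*", "Resource": "*"},
            {"Sid": "AllowKeyAdministration", "Effect": "Allow",
             "Principal": {"AWS": f"{arn}:role/{admin_role}"},
             "Action": ADMIN_ACTIONS, "Resource": "*"},
            {"Sid": "AllowKeyUse", "Effect": "Allow",
             "Principal": {"AWS": f"{arn}:role/{user_role}"},
             "Action": USER_ACTIONS, "Resource": "*"},
        ],
    }


def allowed_by_key_policy(policy, principal_arn, action):
    """Rough check of Allow statements only: ignores Deny, conditions, and IAM policies."""
    for statement in policy["Statement"]:
        if statement["Effect"] != "Allow" or statement["Principal"]["AWS"] != principal_arn:
            continue
        patterns = statement["Action"]
        if isinstance(patterns, str):
            patterns = [patterns]
        # IAM action names match case-insensitively, so compare in lower case.
        if any(fnmatch.fnmatchcase(action.lower(), p.lower()) for p in patterns):
            return True
    return False


if __name__ == "__main__":
    print(json.dumps(key_policy("111122223333", "KeyAdminRole", "AppRole"), indent=2))
```

Because of the first statement, IAM policies in the account can still grant additional key access, so the admin/user separation also depends on keeping those IAM policies narrow.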

Data Protection

Data classification provides a way to categorize organizational data based on levels of sensitivity, and encryption protects data by way of rendering it unintelligible to unauthorized access. These methods are important because they support objectives such as preventing financial loss or complying with regulatory obligations.

In AWS, there are several different approaches to consider when addressing data protection. The following section describes how to use these approaches:

a) Data classification

- Data classification provides a way to categorize organizational data based on levels of sensitivity.

- Public data is non-sensitive, unencrypted, and available to everyone.

- Critical data is encrypted, sensitive, requires authentication and authorization, and is not directly accessible from the internet.

- Using resource tags, IAM policies, AWS KMS, and AWS CloudHSM, we can define and implement our policies for data classification.

- Use Amazon Macie to discover, classify, and protect sensitive data through machine learning.
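A tag-driven classification scheme can be checked mechanically. This sketch assumes a hypothetical `DataClassification` tag and simplified resource fields (`encrypted`, `internet_accessible`), and encodes the public/critical split described above; in practice the same idea would usually be implemented as AWS Config rules or alongside Macie findings.

```python
# Hypothetical controls per classification, following the public/critical split above.
REQUIRED_CONTROLS = {
    "public": {"require_encryption": False, "allow_internet": True},
    "critical": {"require_encryption": True, "allow_internet": False},
}


def classification_findings(resource):
    """Check one resource (assumed fields: tags, encrypted, internet_accessible)."""
    label = resource["tags"].get("DataClassification")
    if label not in REQUIRED_CONTROLS:
        return ["missing or unknown DataClassification tag"]
    rules = REQUIRED_CONTROLS[label]
    findings = []
    if rules["require_encryption"] and not resource["encrypted"]:
        findings.append(f"{label} data must be encrypted")
    if not rules["allow_internet"] and resource["internet_accessible"]:
        findings.append(f"{label} data must not be reachable from the internet")
    return findings
```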

b) Protecting data in transit

- Data in transit is any data that gets transmitted from one system to another.

- AWS services provide HTTPS endpoints using TLS for communication, thus providing encryption in transit when communicating with the AWS APIs.

- Elastic Load Balancing supports secure protocols, including HTTPS with AWS Certificate Manager (ACM) integration.

- Amazon CloudFront supports encrypted endpoints for our content distributions.

- VPN connectivity to a VPC provides encryption of data in transit.
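For S3, a common way to enforce encryption in transit is a bucket policy that denies any request not made over TLS, using the `aws:SecureTransport` condition key. A minimal sketch that builds the policy; the bucket name is a placeholder, and the JSON could be applied with boto3's `s3.put_bucket_policy(Bucket=..., Policy=json.dumps(policy))`.

```python
import json


def deny_insecure_transport_policy(bucket):
    """Bucket policy that rejects any S3 request on the bucket or its objects without TLS."""
    arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "DenyInsecureTransport",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:*",
            "Resource": [arn, f"{arn}/*"],
            "Condition": {"Bool": {"aws:SecureTransport": "false"}},
        }],
    }


if __name__ == "__main__":
    print(json.dumps(deny_insecure_transport_policy("example-bucket"), indent=2))
```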

c) Protecting data at rest

Data at rest represents any data that we persist for any duration. This includes block storage, object storage, databases, archives, and any other storage medium on which data is persisted. Protecting our data at rest with encryption and appropriate access controls reduces the risk of unauthorized access.

The key AWS service that protects data at rest is AWS KMS, a managed service that makes it easy for us to create and control encryption keys.

- AWS services with built-in encryption include Amazon S3, Amazon EBS, Amazon RDS, Amazon DynamoDB, etc.

- Use server-side encryption with customer-provided keys (SSE-C) when we need to supply and manage our own keys.

- Alternatively, encrypt the data locally (client-side encryption) before uploading it to the AWS service.
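With SSE-C, the client sends its own 256-bit key with every request in three headers: the algorithm, the base64-encoded key, and the base64-encoded MD5 of the key (which S3 uses to check the key arrived intact). The sketch below only builds those headers with the standard library. boto3's `put_object` accepts `SSECustomerAlgorithm="AES256"` and `SSECustomerKey` and computes the MD5 header for you; S3 requires HTTPS for SSE-C, and losing the key means losing access to the data.

```python
import base64
import hashlib
import os


def sse_c_headers(key: bytes) -> dict:
    """Build the SSE-C request headers S3 expects for a 256-bit customer-provided key."""
    if len(key) != 32:
        raise ValueError("SSE-C with AES256 needs a 32-byte key")
    return {
        "x-amz-server-side-encryption-customer-algorithm": "AES256",
        "x-amz-server-side-encryption-customer-key": base64.b64encode(key).decode("ascii"),
        "x-amz-server-side-encryption-customer-key-MD5": base64.b64encode(
            hashlib.md5(key).digest()
        ).decode("ascii"),
    }


if __name__ == "__main__":
    # In practice the key would be generated and stored in your own key management system.
    key = os.urandom(32)
    for name, value in sse_c_headers(key).items():
        print(f"{name}: {value}")
```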

d) Encryption/tokenization

Encryption is a way of transforming content so that it is unreadable without the secret key needed to decrypt it back into plaintext.

Tokenization is a process that allows you to define a token to represent an otherwise sensitive piece of information (for example, a token to represent a customer’s credit card number).
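To illustrate the idea, here is a toy in-memory token vault: sensitive values are swapped for random tokens, and only the vault can map a token back. This is a hypothetical sketch, not an AWS service; a production vault would keep the mapping in a hardened, access-controlled store (for example encrypted with keys held in AWS KMS or CloudHSM).

```python
import secrets


class TokenVault:
    """Toy in-memory token vault mapping random tokens to sensitive values."""

    def __init__(self):
        self._by_token = {}
        self._by_value = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so one card number always maps to one token.
        if value in self._by_value:
            return self._by_value[value]
        token = "tok_" + secrets.token_urlsafe(16)  # random, not derived from the value
        self._by_token[token] = value
        self._by_value[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only callers with access to the vault can recover the original value.
        return self._by_token[token]


if __name__ == "__main__":
    vault = TokenVault()
    token = vault.tokenize("4111111111111111")  # a standard test card number
    print(token, vault.detokenize(token))
```

Unlike encryption, the token has no mathematical relationship to the original value, so a leaked token reveals nothing without the vault. Returning the same token for repeated values is a design choice that keeps joins and lookups working.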

AWS CloudHSM is a cloud-based hardware security module (HSM) that enables us to easily generate and use our own encryption keys on the AWS Cloud. It helps you meet corporate, contractual, and regulatory compliance requirements for data security by using FIPS 140-2 Level 3 validated HSMs.

Incident Response

Even with extremely mature preventive and detective controls, our organization should still put processes in place to respond to and mitigate the potential impact of security incidents. The architecture of our workload strongly affects the ability of our teams to operate effectively during an incident, to isolate or contain systems, and to restore operations to a known good state. Putting in place the tools and access ahead of a security incident, then routinely practicing incident response through game days, will help us ensure that our architecture can accommodate timely investigation and recovery.

In AWS, there are several different approaches to consider when addressing incident response, including provisioning a clean room environment for investigation:

- Use tags to quickly determine the impact of an incident and escalate

- Use AWS Config rules with AWS Lambda to auto-recover from incidents.

- During an incident, the right people require access to isolate and contain the incident and then perform a forensic investigation to identify the root cause quickly.

- Use cloud APIs to automate the isolation of affected instances

- For incident investigation, leverage AWS CloudFormation to quickly provision clean infrastructure (a clean room).
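Automated isolation through cloud APIs could look like the function below, which swaps all of an instance's security groups for a pre-created quarantine group and tags the instance so its status is visible. It is a sketch: the quarantine group, tag keys, and incident ID are assumptions, and the EC2 client is passed in so the logic can be exercised with the hypothetical recording stub shown instead of a real `boto3.client("ec2")`. For instances with multiple network interfaces, each interface's groups must be changed separately, and existing tracked connections may persist after a security group change.

```python
def quarantine_instance(ec2, instance_id, quarantine_sg_id, incident_id):
    """Isolate an instance by replacing its security groups with a quarantine group.

    `ec2` is expected to behave like a boto3 EC2 client.
    """
    # Replaces every security group on the instance's primary network interface.
    ec2.modify_instance_attribute(InstanceId=instance_id, Groups=[quarantine_sg_id])
    # Tag the instance so responders can quickly see its status and related incident.
    ec2.create_tags(
        Resources=[instance_id],
        Tags=[
            {"Key": "IncidentId", "Value": incident_id},
            {"Key": "SecurityStatus", "Value": "quarantined"},
        ],
    )


class RecordingEC2Stub:
    """Hypothetical stand-in for an EC2 client that records calls instead of making them."""

    def __init__(self):
        self.calls = []

    def modify_instance_attribute(self, **kwargs):
        self.calls.append(("modify_instance_attribute", kwargs))

    def create_tags(self, **kwargs):
        self.calls.append(("create_tags", kwargs))


if __name__ == "__main__":
    stub = RecordingEC2Stub()
    quarantine_instance(stub, "i-0123456789abcdef0", "sg-0quarantine0000000", "IR-42")
    print(stub.calls)
```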

Conclusion

Security is an ongoing effort. Adopt security at all levels to protect information, systems, and assets while delivering business value through risk assessments and mitigation strategies. When incidents occur, treat them as opportunities to improve the security of the architecture. Strong authentication and authorization controls, automated responses to security events, infrastructure protection at multiple levels, and well-classified data with encryption together provide the defense in depth that every business should expect.
